Azure Key Vault Integration with Databricks & ADLS: Visual Guide with Code

- Shantanu Sharma

- Dec 18, 2021


Updated: Dec 28, 2025

Azure Key Vault (AKV) stores keys, secrets such as passwords and connection strings, and certificates in one central location. Keeping these values in AKV instead of in source code reduces the risk of exposing confidential details such as database connection strings, passwords, and private keys, which can have serious consequences if leaked.

Access to a key vault requires proper authentication and authorization, and with Azure role-based access control (RBAC) teams get fine-grained control over who has which permissions on the sensitive data.

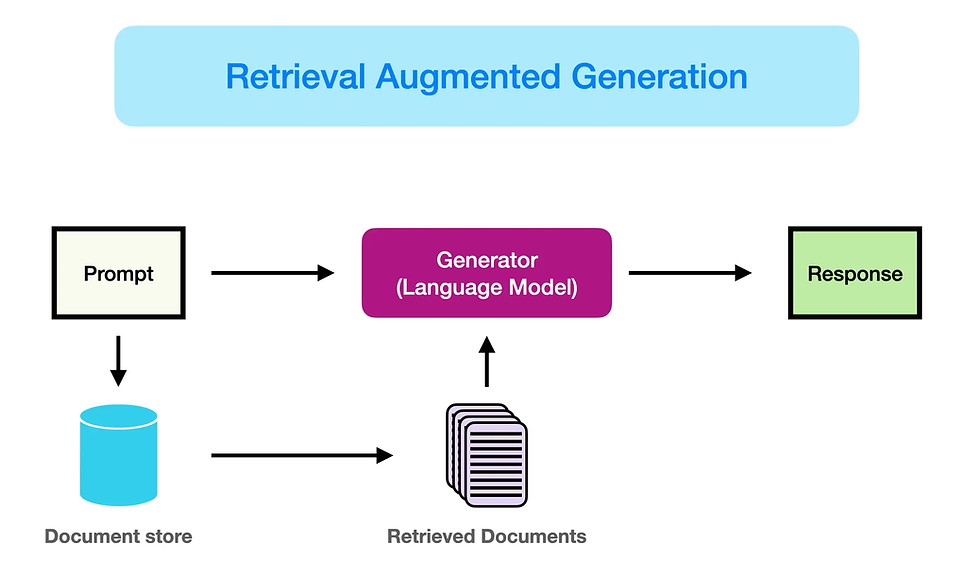

Azure Databricks (ADB) and Azure Data Lake Storage Gen2 (ADLS) integration through Azure Key Vault (AKV)

Here is a pictorial view of how AKV, ADLS, and ADB are configured to work together.

Perform the following steps to create the secret and use it in a Databricks notebook:

Step 1 - Create a key vault secret: Create a secret in the Key Vault service whose value is the Azure storage access key (either key1 or key2 can be used).

The storage access keys can be found under "Access keys" in the "Security + networking" section of the Azure storage account resource.
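Step 1 can also be scripted. The sketch below assumes the Azure SDK for Python package `azure-keyvault-secrets`, whose `SecretClient.set_secret(name, value)` creates or updates a secret. To keep it runnable without Azure access, the client is passed in as a parameter, and the helper name `store_storage_key` is hypothetical. Before writing, it checks the secret name against Key Vault's naming rule (1 to 127 letters, digits, or hyphens).

```python
import re
from typing import Any, Protocol

# Key Vault secret names: 1-127 characters, letters, digits and hyphens only.
SECRET_NAME_RE = re.compile(r"[0-9A-Za-z-]{1,127}")


class SecretWriter(Protocol):
    """Anything with set_secret(name, value), e.g. azure.keyvault.secrets.SecretClient."""

    def set_secret(self, name: str, value: str) -> Any: ...


def store_storage_key(client: SecretWriter, secret_name: str, access_key: str) -> Any:
    """Store an ADLS access key (key1 or key2) as a Key Vault secret (hypothetical helper)."""
    if not SECRET_NAME_RE.fullmatch(secret_name):
        raise ValueError(f"invalid Key Vault secret name: {secret_name!r}")
    if not access_key:
        raise ValueError("storage access key must not be empty")
    return client.set_secret(secret_name, access_key)


# Real usage (needs azure-identity, azure-keyvault-secrets and write access to the vault):
#   from azure.identity import DefaultAzureCredential
#   from azure.keyvault.secrets import SecretClient
#   client = SecretClient(vault_url="https://<vault-name>.vault.azure.net/",
#                         credential=DefaultAzureCredential())
#   store_storage_key(client, "<key-vault-secret>", "<storage-access-key>")
```

Passing the client in keeps the validation logic testable and leaves the choice of credential to the caller.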

Step 2 - Create a Databricks secret scope: Next, create a secret scope in the Databricks workspace. The Azure Key Vault section of the form requires the key vault's DNS name (vault URI) and resource ID.

To open the "Create Secret Scope" page, use the following URL, replacing `<databricks-instance>` with your workspace host name:

`https://<databricks-instance>#secrets/createScope`
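Here `<databricks-instance>` is the workspace host name, such as `adb-<workspace-id>.<n>.azuredatabricks.net`, without the `https://` prefix, and Databricks documents the `createScope` fragment as case sensitive. A minimal sketch that builds the page URL from a workspace URL copied from the browser; the function name and the example workspace ID are hypothetical.

```python
from urllib.parse import urlsplit


def create_scope_url(workspace: str) -> str:
    """Build the "Create Secret Scope" page URL (hypothetical helper).

    Accepts a bare host name or a full workspace URL copied from the browser,
    e.g. "https://adb-1234567890123456.7.azuredatabricks.net/?o=1234567890123456".
    """
    workspace = workspace.strip()
    # urlsplit only fills .netloc when a scheme is present, so add one if missing.
    if "://" not in workspace:
        workspace = "https://" + workspace
    host = urlsplit(workspace).netloc
    if not host:
        raise ValueError("could not find a host name in the workspace URL")
    # The fragment is case sensitive: "createScope" with an uppercase S.
    return f"https://{host}#secrets/createScope"
```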

You can find the Azure Key Vault properties (Vault URI and Resource ID) under the "Properties" section of the key vault service.
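In the Azure public cloud both properties follow fixed patterns: the vault URI is `https://<vault-name>.vault.azure.net/`, and the resource ID is an Azure Resource Manager path under `Microsoft.KeyVault/vaults`. The sketch below assembles them, plus a JSON request body for the Databricks Secrets API endpoint `POST /api/2.0/secrets/scopes/create`, which can create the same Key Vault-backed scope without the UI. The subscription, resource group, vault, and scope names are placeholders, and the payload field names are worth checking against the current Databricks API reference before use.

```python
import json


def keyvault_properties(subscription_id: str, resource_group: str, vault_name: str) -> dict:
    """Return the DNS name (vault URI) and resource ID shown under the vault's Properties."""
    return {
        # Vault URI for the Azure public cloud; sovereign clouds use other DNS suffixes.
        "dns_name": f"https://{vault_name}.vault.azure.net/",
        "resource_id": (
            f"/subscriptions/{subscription_id}"
            f"/resourceGroups/{resource_group}"
            f"/providers/Microsoft.KeyVault/vaults/{vault_name}"
        ),
    }


def scope_create_body(scope: str, props: dict) -> str:
    """JSON body for POST /api/2.0/secrets/scopes/create with an Azure Key Vault backend."""
    body = {
        "scope": scope,
        "scope_backend_type": "AZURE_KEYVAULT",
        "backend_azure_keyvault": {
            "resource_id": props["resource_id"],
            "dns_name": props["dns_name"],
        },
    }
    return json.dumps(body, indent=2)


props = keyvault_properties("<subscription-id>", "<resource-group>", "<vault-name>")
print(scope_create_body("<databricks-scope>", props))
```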

Step 3 - Use the Databricks scope and Key Vault secret in a Databricks notebook: Once the key vault secret and the Databricks secret scope are set up, use them in a notebook to set the Spark configuration property for the storage account.

Here is sample code that sets the configuration property:

```python
# Set the ADLS Gen2 account key from the Key Vault-backed secret scope.
# spark and dbutils are predefined in Databricks notebooks.
spark.conf.set(
    "fs.azure.account.key.<storage-account>.dfs.core.windows.net",
    dbutils.secrets.get(scope="<databricks-scope>", key="<key-vault-secret>"),
)
```

Here `<storage-account>` is the Azure storage account name, `<databricks-scope>` is the Databricks secret scope, and `<key-vault-secret>` is the name of the Key Vault secret.

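The configuration key set in the sample code and the `abfss://` path used in the read step both embed the storage account name, so a mismatch means the key is registered for one account while the read targets another. A small sketch, with hypothetical helper names and example values, that builds both strings from one set of names and checks the account name against Azure's rule for storage accounts (3 to 24 lowercase letters and digits).

```python
import re

# Storage account names: 3-24 characters, lowercase letters and digits only.
ACCOUNT_RE = re.compile(r"[a-z0-9]{3,24}")


def _check_account(storage_account: str) -> str:
    if not ACCOUNT_RE.fullmatch(storage_account):
        raise ValueError(f"invalid storage account name: {storage_account!r}")
    return storage_account


def account_key_conf(storage_account: str) -> str:
    """Spark config key holding the shared access key for an ADLS Gen2 account."""
    account = _check_account(storage_account)
    return f"fs.azure.account.key.{account}.dfs.core.windows.net"


def abfss_path(container: str, storage_account: str, file_path: str) -> str:
    """abfss:// URI of a file inside a container of an ADLS Gen2 account."""
    account = _check_account(storage_account)
    return f"abfss://{container}@{account}.dfs.core.windows.net/{file_path.lstrip('/')}"


# In a Databricks notebook (spark and dbutils are provided there):
#   account = "<storage-account>"
#   spark.conf.set(account_key_conf(account),
#                  dbutils.secrets.get(scope="<databricks-scope>", key="<key-vault-secret>"))
#   df = spark.read.format("csv").option("header", "true") \
#       .load(abfss_path("<container-name>", account, "<file-name>"))
```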
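With the account key set, files can be read directly from the data lake:

```python
# Read a CSV file from the data lake
df = (
    spark.read.format("csv")
    .option("header", "true")
    .load("abfss://<container-name>@<storage-account>.dfs.core.windows.net/<file-name>")
)
```

How the internals work: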

In the notebook we provide `<storage-account>`, the name of the ADLS Gen2 storage account. We also provide two more details: (1) `<databricks-scope>`, the secret scope defined in Databricks. It stores the AKV details (vault URI and resource ID), which locate the key vault where the access key is kept as a secret. (2) `<key-vault-secret>`, the Key Vault secret whose value is the storage access key. When `dbutils.secrets.get` runs, Databricks follows the scope to the key vault and reads that secret; the returned key is then set as the Spark configuration value for the storage account, which the ABFS driver uses to authenticate the `abfss://` read.
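The lookup chain described above can be modelled in a few lines. This is a hypothetical in-memory toy: plain dictionaries stand in for the Databricks scope registry and for Azure Key Vault, and `get_secret` only mimics the role of `dbutils.secrets.get`; it is not how Databricks is implemented. It shows that the notebook only ever names a scope and a secret, never the vault address or the key itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KeyVaultBackedScope:
    """What a Key Vault-backed secret scope records: where the vault is."""
    dns_name: str
    resource_id: str


# Toy stand-ins (hypothetical names): scope registry on the Databricks side,
# secret contents on the Azure Key Vault side.
SCOPES = {
    "adls-scope": KeyVaultBackedScope(
        dns_name="https://kv-demo.vault.azure.net/",
        resource_id="/subscriptions/0000/resourceGroups/rg-data"
                    "/providers/Microsoft.KeyVault/vaults/kv-demo",
    )
}
VAULTS = {"https://kv-demo.vault.azure.net/": {"adls-access-key": "<storage-access-key>"}}


def get_secret(scope: str, key: str) -> str:
    """Resolve scope -> vault -> secret value, like dbutils.secrets.get(scope=..., key=...)."""
    vault_uri = SCOPES[scope].dns_name  # 1. the scope locates the key vault
    return VAULTS[vault_uri][key]       # 2. the secret holds the storage access key


def storage_conf(storage_account: str, scope: str, key: str) -> dict:
    """3. The access key is attached to the storage account via the Spark config key."""
    conf_key = f"fs.azure.account.key.{storage_account}.dfs.core.windows.net"
    return {conf_key: get_secret(scope, key)}
```

In the toy, an unknown scope or secret raises `KeyError`; in Databricks the real call also fails for a missing scope or secret, and secret values are redacted if a notebook tries to display them.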
